This example shows how to use GPUs in OpenFPM step by step. To start to use GPUs with vectors_dist, this example is a good starting point. On the other hand we suggest to read the example simple_vector_example} before this example.

While a cpu data-structure can be created with vector_dist a gpu data-structure can be created with vector_dist_gpu. The GPU vector_dist expose the same CPU interface with additional functionalities. This means that a vector_dist can be changed to vector_dist_gpu without changing a single line of code. This is an important feature because give us the possibility to change our code from CPU to GPU incrementally step-by-step. A small sections of code can be moved to GPU leaving the rest unchanged. The documentation of vector_dist_gpu is the same ad vector_dist, the extended functionality is documented in vector_dist. Every file containing vector_dist_gpu must be compiled with nvcc compiler, so we suggest to use the extension *.cu for such files and add a rule to compile cu files using nvcc. An example of how to do it can be seen checking the Makefile in this example.

While changing from vector_dist to vector_dist_gpu, seem not producing any effect. There are some underling change that take effect:

This code snippet below shows how vector_dist_gpu can be used like vector_dist in simple_vector_example}. In short we create a set of 100 particles (vector_dist_gpu) in 2d from {0.0,0.0} to {1.0,1.0}. Particles are randomly placed in such space. The final map redistribute such particles accordingly to the decomposition.

To offload data to GPU you can use the function hostToDevicePos to offload particle position and hostToDeviceProp to offload properties data. This latest function take template parameters to specify which properties to offload. Here we offload all the properties (scalar,vector,tensor)

Once the data has been offload we can launch a kernel to do some computation. All data-structure gpu ready has a function called toKernel that give the possibility to pass the data-structure to the kernel and be used inside the kernel, like is used on CPU. Lanuching a kernel on cuda require the subdivision of of a loop in workgroups and threads in the workgroups. OpenFPM provides the function getDomainIteratorGPU to automatically split the domain particle loop. The members wthr and thr can be used in the <<<...>>> brachet to launch a CUDA kernel.

The kernel is the definition of a normal CUDA kernel. We use template parameters for every parameter that is passed with toKernel()

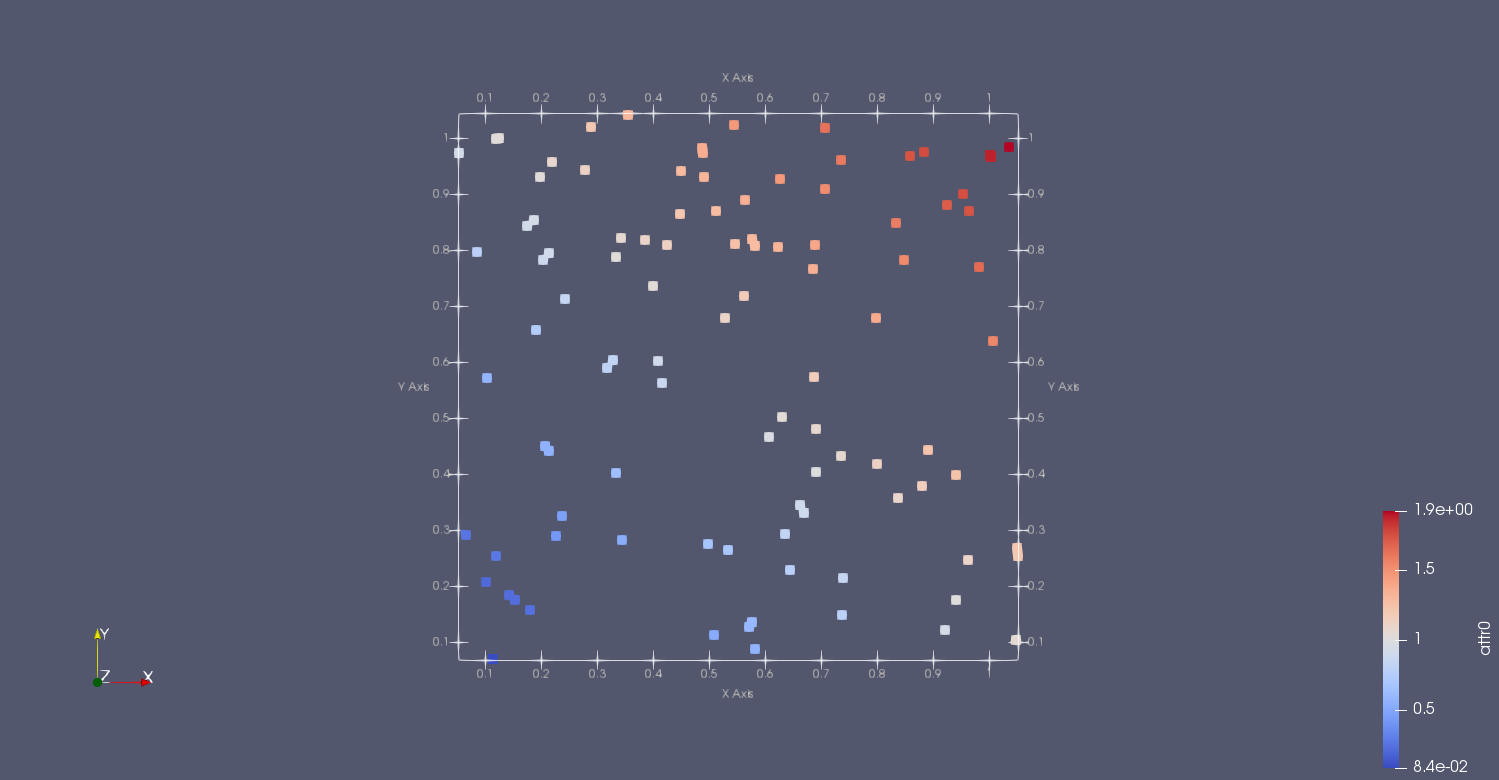

The kernel simply shift the particles by 0.05. Set the scalar properties to the sum of x and y of the "old" particle position, set the vector properties to the old particle position, and set the tensor to several combination of x and y "old" particle position

Once the computation is completed we can ask to reoffload the data from device to host and write the results to file.

Until here we saw how to move data from host to device, device to host and how to launch a CUDA kernel on off-loaded data. As previously mentioned vector_dist_gpu has the same CPU interface and so provide the standard function map and ghost_get that work on host pinned memory. Because we want to avoid to move data from GPU to host memory. To avoid it we can use map with the option RUN_DEVICE to redistribute the particles directly on GPU, and ghost_get with RUN_DEVICE to fill ghost particles directly on GPU. In the loop below we see how we can use map on a particle set that is already on GPU. In particular we never offload particles on CPU to do map or ghost_get. We use the kernel translate_fill_prop, to translate the particles and update the properties. The only offload happen every 10 time-step to write on file.

Today MPI implementations are able to do RDMA on GPU memory. This in practice mean that Infiniband card can directly read GPU memory transfer over infiniband and write on the other node directly on GPU, without moving the data to system memory. In practice means that MPI calls can work directly on CUDA device pointers. OpenFPM can exploit this feature if MPI is compiled with CUDA support. To check if MPI is compiled with CUDA support use the function is_mpi_rdma_cuda_active()

It is good to note that in order to work (return true), some condition must be met.

When we want to launch a kernel "my_kernel" on CUDA we in general use the Nvidia CUDA syntax

my_kernel<<<wthr,thr>>>(arguments ... )

Where wthr is the number of workgroups and thr is the number of threads in a workgroup and arguments... are the arguments to pass to the kernel. Equivalently we can launch a kernel with the macro CUDA_LAUNCH_DIM3(my_kernel,wthr,thr,arguments...) or CUDA_LAUNCH(my_kernel,ite,arguments) where ite has been taken using getDomainIteratorGPU. There are several advantage on using CUDA_LAUNCH. The first advantage in using the macro is enabling SE_CLASS1 all kernel launch become synchronous and an error check is performed before continue to the next kernel making debugging easier. Another feature is the possibility to run CUDA code on CPU without a GPU. compiling with "CUDA_ON_CPU=1 make" (Note openfpm must be compiled with GPU support (-g) or with CUDA_ON_CPU support (-c "... --enable_cuda_on_cpu"). You can compile this example on CPU. You do not have to change a single line of code for this example. (Check the video to see this feature in action). All the openfpm GPU example and CUDA example can run on CPU if they use CUDA_LAUNCH as macro. We are planning to support AMD GPUs as well using this system.