Solving a gray scott-system in 3D using Sparse grids on GPU optimized

This example show how to solve a Gray-Scott system in 3D using sparse grids on gpu (optimized). The problem is the same as Gray Scott in 3D

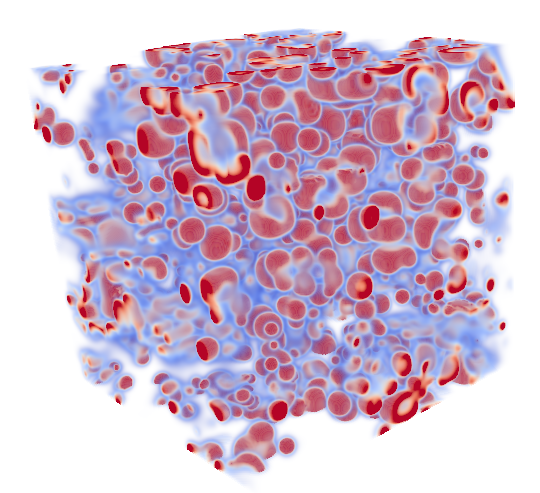

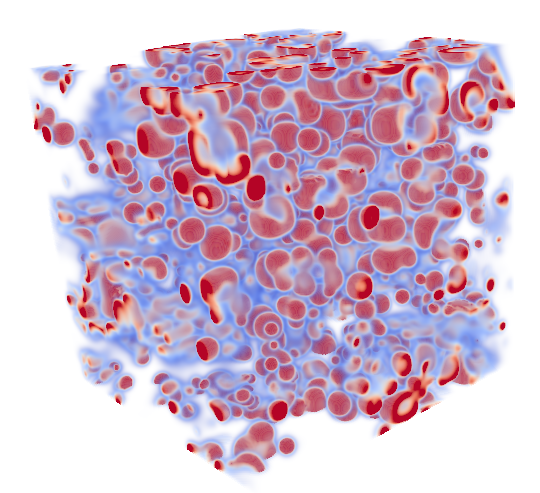

In figure is the final solution of the problem

More or less this example is the adaptation of the dense example in 3D

- See also

- Gray Scott in 3D

Finalize

Deinitialize the library

Full code

#include "Grid/grid_dist_id.hpp"

#include "data_type/aggregate.hpp"

#include "timer.hpp"

constexpr int U = 0;

constexpr int V = 1;

constexpr int x = 0;

constexpr int y = 1;

constexpr int z = 2;

{

auto it = Old.getGridIterator();

while (it.isNext())

{

auto key = it.get_dist();

Old.template insert<U>(key) = 1.0;

Old.template insert<V>(key) = 0.0;

New.template insert<U>(key) = 0.0;

New.template insert<V>(key) = 0.0;

++it;

}

long int x_start = Old.size(0)*1.55f/domain.getHigh(0);

long int y_start = Old.size(1)*1.55f/domain.getHigh(1);

long int z_start = Old.size(1)*1.55f/domain.getHigh(2);

long int x_stop = Old.size(0)*1.85f/domain.getHigh(0);

long int y_stop = Old.size(1)*1.85f/domain.getHigh(1);

long int z_stop = Old.size(1)*1.85f/domain.getHigh(2);

auto it_init = Old.getGridIterator(start,stop);

while (it_init.isNext())

{

auto key = it_init.get_dist();

Old.template insert<U>(key) = 0.5 + (((double)std::rand())/RAND_MAX -0.5)/10.0;

Old.template insert<V>(key) = 0.25 + (((double)std::rand())/RAND_MAX -0.5)/20.0;

++it_init;

}

}

int main(int argc, char* argv[])

{

openfpm_init(&argc,&argv);

size_t sz[3] = {128,128,128};

double deltaT = 1;

double du = 2*1e-5;

double dv = 1*1e-5;

#ifdef TEST_RUN

size_t timeSteps = 200;

#else

size_t timeSteps = 5000;

#endif

double K = 0.053;

double spacing[3] = {Old.spacing(0),Old.spacing(1),Old.spacing(2)};

size_t count = 0;

Old.template ghost_get<U,V>();

double uFactor = deltaT * du/(spacing[x]*spacing[x]);

double vFactor = deltaT * dv/(spacing[x]*spacing[x]);

Old.write("Init_condition");

auto & v_cl = create_vcluster();

for (size_t i = 0; i < timeSteps; ++i)

{

if (v_cl.rank() == 0)

{std::cout << "STEP: " << i << std::endl;}

auto it = Old.getDomainIterator();

while (it.isNext())

{

auto Cp = it.get();

auto mx = Cp.move(0,-1);

auto px = Cp.move(0,+1);

auto my = Cp.move(1,-1);

auto py = Cp.move(1,1);

auto mz = Cp.move(2,-1);

auto pz = Cp.move(2,1);

New.insert<U>(Cp) = Old.get<U>(Cp) + uFactor * (

Old.get<U>(mz) +

Old.get<U>(pz) +

Old.get<U>(my) +

Old.get<U>(py) +

Old.get<U>(mx) +

Old.get<U>(px) -

6.0*Old.get<U>(Cp)) +

- deltaT * Old.get<U>(Cp) * Old.get<V>(Cp) * Old.get<V>(Cp) +

- deltaT *

F * (Old.get<U>(Cp) - 1.0);

New.insert<V>(Cp) = Old.get<V>(Cp) + vFactor * (

Old.get<V>(mz) +

Old.get<V>(pz) +

Old.get<V>(my) +

Old.get<V>(py) +

Old.get<V>(mx) +

Old.get<V>(px) -

6*Old.get<V>(Cp)) +

deltaT * Old.get<U>(Cp) * Old.get<V>(Cp) * Old.get<V>(Cp) +

- deltaT * (

F+K) * Old.get<V>(Cp);

++it;

}

Old.copy_sparse(New);

Old.ghost_get<U,V>();

if (i % 500 == 0)

{

Old.save("output_" + std::to_string(count));

count++;

}

}

std::cout <<

"Total simulation: " << tot_sim.

getwct() << std::endl;

openfpm_finalize();

}

This class represent an N-dimensional box.

This is a distributed grid.

grid_key_dx is the key to access any element in the grid

Class for cpu time benchmarking.

void stop()

Stop the timer.

void start()

Start the timer.

double getwct()

Return the elapsed real time.

OutputIteratorT OffsetT ReductionOpT OuputT init

< [in] The initial value of the reduction

[v_transform metafunction]

aggregate of properties, from a list of object if create a struct that follow the OPENFPM native stru...

Finalize

Deinitialize the library

Full code

#include "Grid/grid_dist_id.hpp"

#include "data_type/aggregate.hpp"

#include "timer.hpp"

constexpr int U = 0;

constexpr int V = 1;

constexpr int x = 0;

constexpr int y = 1;

constexpr int z = 2;

{

auto it = Old.getDomainIterator();

while (it.isNext())

{

auto key = it.get();

Old.template get<U>(key) = 1.0;

Old.template get<V>(key) = 0.0;

New.template get<U>(key) = 0.0;

New.template get<V>(key) = 0.0;

++it;

}

long int x_start = Old.size(0)*1.55f/domain.getHigh(0);

long int y_start = Old.size(1)*1.55f/domain.getHigh(1);

long int z_start = Old.size(1)*1.55f/domain.getHigh(2);

long int x_stop = Old.size(0)*1.85f/domain.getHigh(0);

long int y_stop = Old.size(1)*1.85f/domain.getHigh(1);

long int z_stop = Old.size(1)*1.85f/domain.getHigh(2);

auto it_init = Old.getSubDomainIterator(start,stop);

while (it_init.isNext())

{

auto key = it_init.

get();

Old.template get<U>(key) = 0.5 + (((double)std::rand())/RAND_MAX -0.5)/10.0;

Old.template get<V>(key) = 0.25 + (((double)std::rand())/RAND_MAX -0.5)/20.0;

++it_init;

}

}

int main(int argc, char* argv[])

{

openfpm_init(&argc,&argv);

size_t sz[3] = {128,128,128};

double deltaT = 1;

double du = 2*1e-5;

double dv = 1*1e-5;

size_t timeSteps = 5000;

double K = 0.053;

double spacing[3] = {Old.spacing(0),Old.spacing(1),Old.spacing(2)};

size_t count = 0;

Old.template ghost_get<U,V>();

double uFactor = deltaT * du/(spacing[x]*spacing[x]);

double vFactor = deltaT * dv/(spacing[x]*spacing[x]);

{0,0,-1},

{0,0,1},

{0,-1,0},

{0,1,0},

{-1,0,0},

{1,0,0}};

for (size_t i = 0; i < timeSteps; ++i)

{

if (i % 300 == 0)

{std::cout << "STEP: " << i << std::endl;}

auto it = Old.getDomainIteratorStencil(star_stencil_3D);

while (it.isNext())

{

auto Cp = it.getStencil<0>();

auto mx = it.getStencil<1>();

auto px = it.getStencil<2>();

auto my = it.getStencil<3>();

auto py = it.getStencil<4>();

auto mz = it.getStencil<5>();

auto pz = it.getStencil<6>();

New.

get<U>(Cp) = Old.get<U>(Cp) + uFactor * (

Old.get<U>(mz) +

Old.get<U>(pz) +

Old.get<U>(my) +

Old.get<U>(py) +

Old.get<U>(mx) +

Old.get<U>(px) -

6.0*Old.get<U>(Cp)) +

- deltaT * Old.get<U>(Cp) * Old.get<V>(Cp) * Old.get<V>(Cp) +

- deltaT *

F * (Old.get<U>(Cp) - 1.0);

New.get<V>(Cp) = Old.get<V>(Cp) + vFactor * (

Old.get<V>(mz) +

Old.get<V>(pz) +

Old.get<V>(my) +

Old.get<V>(py) +

Old.get<V>(mx) +

Old.get<V>(px) -

6*Old.get<V>(Cp)) +

deltaT * Old.get<U>(Cp) * Old.get<V>(Cp) * Old.get<V>(Cp) +

- deltaT * (

F+K) * Old.get<V>(Cp);

++it;

}

Old.copy(New);

Old.ghost_get<U,V>();

if (i % 500 == 0)

{

count++;

}

}

std::cout <<

"Total simulation: " << tot_sim.

getwct() << std::endl;

openfpm_finalize();

}

__device__ __host__ index_type get(index_type i) const

Get the i index.

Finalize

Deinitialize the library

Finalize

Deinitialize the library